Expected Surprisals

1897 to 2011 : Winners v Losers - Leads, Scoring Shots and Conversion

1897 to 2011 : Goals, Behinds, Scoring Accuracy & Winning Team Results By Quarter

A Well-Calibrated Model

Measures of Game Competitiveness

Margins of Victory Across the Seasons

A First Look At Surprisals for 2011

Line Fund Profitability and Probability Scores

The Calibration of the Head-to-Head Fund Algorithm

All You Ever Wanted to Know About Favourite-Longshot Bias ...

Previously, on at least a few occasions, I've looked at the topic of the Favourite-Longshot Bias and whether or not it exists in the TAB Sportsbet wagering markets for AFL.

A Favourite-Longshot Bias (FLB) is said to exist when favourites win at a rate in excess of their price-implied probability and longshots win at a rate less than their price-implied probability. So if, for example, teams priced at $10 - ignoring the vig for now - win at a rate of just 1 time in 15, this would be evidence for a bias against longshots. In addition, if teams priced at $1.10 won, say, 99% of the time, this would be evidence for a bias towards favourites.
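To make the two examples concrete, here's a minimal sketch that converts a decimal price into its price-implied probability (ignoring the vig, as the text does) and compares it with the observed win rate. The function name `implied_probability` is purely illustrative.

```python
def implied_probability(price: float) -> float:
    """Price-implied probability for a decimal price, ignoring the vig."""
    if price <= 1.0:
        raise ValueError("a decimal price must exceed 1")
    return 1.0 / price


# The two examples from the text.
longshot_implied = implied_probability(10.0)    # 1 in 10
longshot_actual = 1 / 15                        # wins only 1 time in 15
favourite_implied = implied_probability(1.10)   # about 91%
favourite_actual = 0.99                         # wins 99% of the time

# Longshots winning less often than their price implies, and favourites
# winning more often, is the pattern that defines an FLB.
print(longshot_actual < longshot_implied)
print(favourite_actual > favourite_implied)
```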

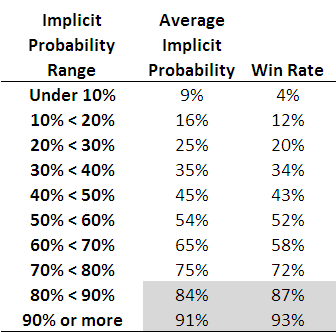

When I've considered this topic in the past I've generally produced tables such as the following, which are highly suggestive of the existence of such an FLB.

Each row of this table, which is based on all games from 2006 to the present, corresponds to the results for teams with price-implied probabilities in a given range. The first row, for example, is for all those teams whose price-implied probability was less than 10%. This equates, roughly, to teams priced at $9.50 or more. The average implied probability for these teams has been 9%, yet they've won at a rate of only 4%, less than one-half of their 'expected' rate of victory.

As you move down the table you need to arrive at the second-last row before you come to one where the win rate exceeds the expected rate (ie the average implied probability). That's fairly compelling evidence for an FLB.

This empirical analysis is interesting as far as it goes, but we need a more rigorous statistical approach if we're to take it much further. And heck, one of the things I do for a living is build statistical models, so you'd think that by now I might have thrown such a model at the topic ...

A bit of poking around on the net uncovered this paper which proposes an eminently suitable modelling approach, using what are called conditional logit models.

In this formulation we seek to explain a team's winning rate purely as a function of (the natural log of) its price-implied probability. There's only one parameter to fit in such a model and its value tells us whether or not there's evidence for an FLB: if it's greater than 1 then there is evidence for an FLB, and the larger it is the more pronounced is the bias.

When we fit this model to the data for the period 2006 to 2010 the fitted value of the parameter is 1.06, which provides evidence for a moderate level of FLB. The following table gives you some idea of the size and nature of the bias.

The first row applies to those teams whose price-implied probability of victory is 10%. A fair-value price for such teams would be $10 but, with a 6% vig applied, these teams would carry a market price of around $9.40. The modelled win rate for these teams is just 9%, which is slightly less than their implied probability. So, even if you were able to bet on these teams at their fair-value price of $10, you'd lose money in the long run. Because, instead, you can only bet on them at $9.40 or thereabouts, in reality you lose even more - about 16c in the dollar, as the last column shows.

We need to move all the way down to the row for teams with 60% implied probabilities before we reach a row where the modelled win rate exceeds the implied probability. The excess is not, regrettably, enough to overcome the vig, which is why the rightmost entry for this row is also negative - as, indeed, it is for every other row underneath the 60% row.
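The arithmetic behind rows like these can be sketched in a few lines. The post doesn't spell out the exact model specification, so the form below is an assumption: a two-team conditional logit in which each team's "utility" is the fitted parameter times the natural log of its implied probability, and the opponent's implied probability is taken to be one minus the team's. The 6% vig is applied by multiplying the fair price by 0.94, as in the $10 to $9.40 example. All function names are illustrative.

```python
def modelled_win_rate(implied_prob: float, beta: float) -> float:
    """Two-team conditional logit on log implied probability (assumed form).

    exp(beta * ln(p)) is just p ** beta, so the modelled rate is the team's
    p ** beta as a share of the two teams' combined total.
    """
    own = implied_prob ** beta
    other = (1.0 - implied_prob) ** beta
    return own / (own + other)


def return_per_dollar(implied_prob: float, beta: float, vig: float = 0.06) -> float:
    """Expected profit per $1 level-stake bet at the vig-adjusted market price."""
    fair_price = 1.0 / implied_prob
    market_price = fair_price * (1.0 - vig)
    return modelled_win_rate(implied_prob, beta) * market_price - 1.0


BETA = 1.06  # fitted value quoted in the text for 2006 to 2010

for p in (0.10, 0.50, 0.60):
    print(p, round(modelled_win_rate(p, BETA), 3), round(return_per_dollar(p, BETA), 3))
```

Under these assumptions a team with a 10% implied probability has a modelled win rate of roughly 9%, a 50% team is modelled at exactly 50%, and a 60% team is modelled slightly above 60% but still shows a negative return once the vig is applied. With a parameter of exactly 1 the modelled rate equals the implied probability, which is why values above 1 signal an FLB.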

Conclusion: there has been an FLB on the TAB Sportsbet market for AFL across the period 2006-2010, but it hasn't been generally exploitable (at least to level-stake wagering).

The modelling approach I've adopted also allows us to consider subsets of the data to see if there's any evidence for an FLB in those subsets.

I've looked firstly at the evidence for FLB considering just one season at a time, then considering only particular rounds across the five seasons.

So, there is evidence for an FLB for every season except 2007. For that season there's evidence of a reverse FLB, which means that longshots won more often than they were expected to and favourites won less often. In fact, in that season, the modelled success rate of teams with implied probabilities of 20% or less was sufficiently high to overcome the vig and make wagering on them a profitable strategy.

That year aside, 2010 has been the year with the smallest FLB. One way to interpret this is as evidence for an increasing level of sophistication in the TAB Sportsbet wagering market, from punters or the bookie, or both. Let's hope not.

Turning next to a consideration of portions of the season, we can see that there's tended to be a very mild reverse FLB through rounds 1 to 6, a mild to strong FLB across rounds 7 to 16, a mild reverse FLB for the last 6 rounds of the season and a huge FLB in the finals. There's a reminder in that for all punters: longshots rarely win finals.

Lastly, I considered a few more subsets, and found:

- No evidence of an FLB in games that are interstate clashes (fitted parameter = 0.994)

- Mild evidence of an FLB in games that are not interstate clashes (fitted parameter = 1.03)

- Mild to moderate evidence of an FLB in games where there is a home team (fitted parameter = 1.07)

- Mild to moderate evidence of a reverse FLB in games where there is no home team (fitted parameter = 0.945)

FLB: done.

Season 2010: An Assessment of Competitiveness

For many, the allure of sport lies in its uncertainty. It's this instinct, surely, that motivated the creation of the annual player drafts and salary caps - the desire to ensure that teams don't become unbeatable, that "either team can win on the day".

Objective measures of the competitiveness of AFL can be made at any of three levels: teams' competition wins and losses, the outcome of a game, or the in-game trading of the lead.

With just a little pondering, I came up with the following measures of competitiveness at the three levels; I'm sure there are more.

We've looked at most - maybe all - of the Competition and Game level measures I've listed here in blogs or newsletters of previous seasons. I'll leave any revisiting of these measures for season 2010 as a topic for a future blog.

The in-game measures, though, are ones we've not explicitly explored, though I think I have commented on at least one occasion this year about the surprisingly high proportion of winning teams that have won 1st quarters and the low proportion of teams that have rallied to win after trailing at the final change.

As ever, history provides some context for my comments.

The red line in this chart records the season-by-season proportion of games in which the same team has led at every change. You can see that there's been a general rise in the proportion of such games from about 50% in the late seventies to the 61% we saw this year.

In recent history there have only been two seasons where the proportion of games led by the same team at every change has been higher: in 1995, when it was almost 64%, and in 1985 when it was a little over 62%. Before that you need to go back to 1925 to find a proportion that's higher than what we've seen in 2010.

The green, purple and blue lines track the proportion of games for which there were one, two, and the maximum possible three lead changes respectively. It's also interesting to note how the lead-change-at-every-change contest type has progressively disappeared into virtual non-existence over the last 50 seasons. This year we saw only three such contests, one of them (Fremantle v Geelong) in Round 3, and then no more until a pair of them (Fremantle v Geelong and Brisbane v Adelaide) in Round 20.
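For anyone wanting to reproduce this kind of count, here's a minimal sketch of how the number of lead changes in a game might be tallied from the margins at each change. The treatment of scores that are level at a break isn't described in the post, so skipping tied breaks is an assumption, and the four games listed are made-up margins for illustration only.

```python
from collections import Counter


def count_lead_changes(margins):
    """Count lead changes across successive breaks in one game.

    margins: one team's margin at quarter time, half time, three-quarter
    time and full time (negative when that team trails).
    Assumption: a break with scores level is skipped, so the previous
    leader is carried forward to the next break.
    """
    changes = 0
    leader = 0  # +1 or -1 once a team has led, 0 before that
    for margin in margins:
        current = (margin > 0) - (margin < 0)
        if current == 0:
            continue
        if leader != 0 and current != leader:
            changes += 1
        leader = current
    return changes


# Hypothetical games, not real results.
games = [
    [10, 20, 5, 30],    # same team leads at every change
    [10, -3, 4, -1],    # lead changes at every change
    [5, -2, -8, -20],   # one lead change
    [-7, -1, 12, 3],    # one lead change
]

distribution = Counter(count_lead_changes(g) for g in games)
shares = {k: distribution[k] / len(games) for k in range(4)}
print(shares)
```

Applied season by season, the share for zero changes corresponds to the red line in the chart and the share for three changes to the lead-change-at-every-change contests.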

So we're getting fewer lead changes in games. When, exactly, are these lead changes not happening?

Pretty much everywhere, it seems, but especially between the ends of quarters 1 and 2.

The top line shows the proportion of games in which the team leading at half time differs from the team leading at quarter time (a statistic that, as for all the others in this chart, I've averaged over the preceding 10 years to iron out the fluctuations and better show the trend). It has generally been falling since the 1960s, apart from a brief period of stability through the 1990s that recent seasons have not maintained, the current season in particular, during which it's been just 23%.

Next, the red line, which shows the proportion of games in which the team leading at three-quarter time differs from the team leading at half time. This statistic has declined across the period roughly covering the 1980s through to 2000, since which it has stabilised at about 20%.

The navy blue line shows the proportion of games in which the winning team differs from the team leading at three-quarter time. Its trajectory is similar to that of the red line, though it doesn't show the jaunty uptick in recent seasons that the red line does.

Finally, the dotted, light-blue line, which shows the overall proportion of quarters for which the team leading at one break was different from the team leading at the previous break. Its trend has been downwards since the 1960s though the rate of decline has slowed markedly since about 1990.
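The 10-year averaging used for the lines in this chart can be sketched as a simple trailing mean. Whether the window includes the current season, and how the earliest seasons (with fewer than 10 years of history) are handled, isn't stated, so both choices below are assumptions.

```python
def trailing_average(values, window=10):
    """Trailing mean of each value and up to window - 1 values before it.

    Assumptions: the current season is included in its own window, and
    early seasons are averaged over however many seasons are available.
    """
    averaged = []
    for i in range(len(values)):
        start = max(0, i - window + 1)
        chunk = values[start:i + 1]
        averaged.append(sum(chunk) / len(chunk))
    return averaged


# Tiny illustrative series with a 2-season window.
print(trailing_average([1, 2, 3, 4], window=2))
```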

All told then, if your measure of AFL competitiveness is how often the lead changes from the end of one quarter to the next, you'd have to conclude that AFL games are gradually becoming less competitive.

It'll be interesting to see how the introduction of new teams over the next few seasons affects this measure of competitiveness.

A Competition of Two Halves

In the previous blog I suggested that, based on winning percentages when facing finalists, the top 8 teams (well, actually the top 7) were of a different class to the other teams in the competition.

Current MARS Ratings provide further evidence for this schism. To put the size of the difference in an historical perspective, I thought it might be instructive to review the MARS Ratings of teams at a similar point in the season for each of the years 1999 to 2010.

(This also provides me an opportunity to showcase one of the capabilities - strip-charts - of a sparklines tool that can be downloaded for free and used with Excel.)

In the chart, each row relates the MARS Ratings that the 16 teams had as at the end of Round 22 in a particular season. Every strip in the chart corresponds to the Rating of a single team, and the relative position of that strip is based on the team's Rating - the further to the right the strip is, the higher the Rating.

The red strip in each row corresponds to a Rating of 1,000, which is always the average team Rating.

While the strips provide a visual guide to the spread of MARS Ratings for a particular season, the data in the columns at right offer another, more quantitative view. The first column is the average Rating of the 8 highest-rated teams, the middle column the average Rating of the 8 lowest-rated teams, and the right column is the difference between the two averages. Larger values in this right column indicate bigger differences in the MARS Ratings of teams rated highest compared to those rated lowest.

(I should note that the 8 highest-rated teams will not always be the 8 finalists, but the differences in the composition of these two sets of eight teams don't appear to be material enough to prevent us from talking about them as if they were interchangeable.)
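The three columns described above are straightforward to compute for any one season. Here's a minimal sketch; the Ratings used are invented for illustration (chosen to average 1,000) and are not actual MARS Ratings.

```python
def rating_split(ratings):
    """Return (top-half average, bottom-half average, difference)."""
    ordered = sorted(ratings, reverse=True)
    half = len(ordered) // 2
    top_average = sum(ordered[:half]) / half
    bottom_average = sum(ordered[half:]) / (len(ordered) - half)
    return top_average, bottom_average, top_average - bottom_average


# Hypothetical Ratings for 16 teams, averaging 1,000.
example_ratings = [1000 + d for d in (5, 10, 15, 20, 25, 30, 35, 40)] + \
                  [1000 - d for d in (5, 10, 15, 20, 25, 30, 35, 40)]

top, bottom, difference = rating_split(example_ratings)
print(top, bottom, difference)
```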

What we see immediately is that the difference in the average Rating of the top and bottom teams this year is the greatest that it's been during the period I've covered. Furthermore, the difference has come about because this year's top 8 has the highest-ever average Rating and this year's bottom 8 has the lowest-ever average Rating.

The season that produced the smallest difference in average Ratings was 1999, which was the year in which 3 teams finished just one game out of the eight and another finished just two games out. That season also produced the all-time lowest rated top 8 and highest rated bottom 8.

While we're on MARS Ratings and adopting an historical perspective (and creating sparklines), here's another chart, this one mapping the ladder and MARS performances of the 16 teams as at the end of the home-and-away seasons of 1999 to 2010.

One feature of this chart that's immediately obvious is the strong relationship between the trajectory of each team's MARS Rating history and its ladder fortunes, which is as it should be if the MARS Ratings mean anything at all.

Other aspects that I find interesting are the long-term decline of the Dons, the emergence of Collingwood, Geelong and St Kilda, and the precipitous rise and fall of the Eagles.

I'll finish this blog with one last chart, this one showing the MARS Ratings of the teams finishing in each of the 16 ladder positions across seasons 1999 to 2010.

As you'd expect - and as we saw in the previous chart on a team-by-team basis - lower ladder positions are generally associated with lower MARS Ratings.

But the "weather" (ie the results for any single year) is different from the "climate" (ie the overall correlation pattern). Put another way, for some teams in some years, ladder position and MARS Rating are measuring something different. Whether either, or neither, is measuring what it purports to - relative team quality - is a judgement I'll leave in the reader's hands.

A Line Betting Enigma

Trialling The Super Smart Model

Predicting Head-to-Head Market Prices

Is the Home Ground Advantage Disappearing?

Goalkicking Accuracy Across The Seasons

Using a Ladder to See the Future

The main role of the competition ladder is to provide a summary of the past. In this blog we'll be assessing what it can tell us about the future. Specifically, we'll be looking at what can be inferred about the make-up of the finals by reviewing the competition ladder at different points of the season.

I'll be restricting my analysis to the seasons 1997-2009 (which sounds a bit like a special category for Einstein Factor, I know) as these seasons all had a final 8, twenty-two rounds and were contested by the same 16 teams - not that this last feature is particularly important.

Let's start by asking the question: for each season and on average how many of the teams in the top 8 at a given point in the season go on to play in the finals?

The first row of the table shows how many of the teams that were in the top 8 after the 1st round - that is, of the teams that won their first match of the season - went on to play in September. A chance result would be 4, and in 7 of the 13 seasons the actual number was higher than this. On average, just under 4.5 of the teams that were in the top 8 after 1 round went on to play in the finals.
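The count in each cell of this table, and the chance benchmark of 4, can be sketched as follows. The team labels are placeholders rather than real ladder data. The chance figure comes from treating the current top 8 as a random pick of 8 teams from 16, each of which has an 8-in-16 chance of being a finalist.

```python
def finalists_retained(top8_now, finalists):
    """How many teams in the current top 8 went on to play finals."""
    return len(set(top8_now) & set(finalists))


def chance_expectation(n_teams=16, n_top=8, n_finalists=8):
    """Expected overlap if the current top 8 were chosen at random."""
    return n_top * n_finalists / n_teams


# Placeholder team labels, not an actual season.
top8_after_round_1 = ["T1", "T2", "T3", "T4", "T5", "T6", "T7", "T8"]
eventual_finalists = ["T1", "T2", "T3", "T4", "T5", "T9", "T10", "T11"]

print(finalists_retained(top8_after_round_1, eventual_finalists))
print(chance_expectation())
```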

This average number of teams from the current Top 8 making the final Top 8 grows steadily as we move through the rounds of the first half of the season, crossing 5 after Round 2, and 6 after Round 7. In other words, historically, three-quarters of the finalists have been determined after less than one-third of the season. The 7th team to play in the finals is generally not determined until Round 15, and even after 20 rounds there have still been changes in the finalists in 5 of the 13 seasons.

Last year is notable for the fact that the composition of the final 8 was revealed - not that we knew - at the end of Round 12 and this roster of teams changed only briefly, for Rounds 18 and 19, before solidifying for the rest of the season.

Next we ask a different question: if your team's in ladder position X after Y rounds, where, on average, can you expect it to finish?

Regression to the mean is on abundant display in this table with teams in higher ladder positions tending to fall and those in lower positions tending to rise. That aside, one of the interesting features about this table for me is the extent to which teams in 1st at any given point do so much better than teams in 2nd at the same point. After Round 4, for example, the difference is 2.6 ladder positions.

Another phenomenon that caught my eye was the tendency for teams in 8th position to climb the ladder while those in 9th tend to fall, contrary to the overall tendency for regression to the mean already noted.

One final feature that I'll point out is what I'll call the Discouragement Effect (though I might, more cynically and possibly more accurately, have called it the Priority Pick Effect), which seems to afflict teams that are in last place after Round 5. On average, these teams climb only 2 places during the remainder of the season.

Averages, of course, can be misleading, so rather than looking at the average finishing ladder position, let's look at the proportion of times that a team in ladder position X after Y rounds goes on to make the final 8.

One immediately striking result from this table is the fact that the team that led the competition after 1 round - which will be the team that won with the largest ratio of points for to points against - went on to make the finals in 12 of the 13 seasons.

You can use this table to determine when a team is a lock or is no chance to make the final 8. For example, no team has made the final 8 from last place at the end of Round 5. Also, two teams as lowly ranked as 12th after 13 rounds have gone on to play in the finals, and one team that was ranked 12th after 17 rounds still made the September cut.

If your team is in 1st or 2nd place after 10 rounds you have history on your side for them making the top 8 and if they're higher than 4th after 16 rounds you can sport a similarly warm inner glow.
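A table like this one can be built by tallying, for each combination of round and ladder position, how often the team in that spot went on to make the final 8. Here's a minimal sketch; the record layout (round, position, made-finals flag) is an assumed format, and the only data used is the 12-in-13 result for the Round 1 ladder leader quoted above.

```python
from collections import defaultdict


def finals_rate(records):
    """Proportion of teams making the finals by (round, ladder position).

    records: iterable of (round, position, made_finals) tuples, one per
    team per round per season (an assumed layout).
    """
    made = defaultdict(int)
    seen = defaultdict(int)
    for rnd, position, made_finals in records:
        seen[(rnd, position)] += 1
        made[(rnd, position)] += int(made_finals)
    return {key: made[key] / seen[key] for key in seen}


# The Round 1 leader made the finals in 12 of the 13 seasons.
round_1_leaders = [(1, 1, True)] * 12 + [(1, 1, False)]

rates = finals_rate(round_1_leaders)
print(round(rates[(1, 1)], 3))
```

Swapping the made-finals flag for a made-Top-4 flag gives the equivalent Top 4 table with no other changes.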

Lastly, if your aspirations for your team are for a top 4 finish here's the same table but with the percentages in terms of making the Top 4 not the Top 8.

Perhaps the most interesting fact to extract from this table is how unstable the Top 4 is. For example, even as late as the end of Round 21 only 62% of the teams in 4th spot have finished in the Top 4. In 2 of the 13 seasons a Top 4 spot has been grabbed by a team in 6th or 7th at the end of the penultimate round.

MARS Ratings of the Finalists

We've had a cracking finals series so far and there's the prospect of even better to come. Two matches that stand out from what we've already witnessed are the Lions v Carlton and Collingwood v Adelaide games. A quick look at the Round 22 MARS ratings of these teams tells us just how evenly matched they were.

Glancing down to the bottom of the 2009 column tells us a bit more about the quality of this year's finalists.

As a group, their average rating is 1,020.8, which is the 3rd highest average rating since season 2000, behind only the averages for 2001 and 2003, and weighed down by the sub-1000 rating of the eighth-placed Dons.

At the top of the 8, the quality really stands out. The top 4 teams have the highest average rating for any season since 2000, and the top 5 teams are all rated 1,025 or higher, a characteristic also unique to 2009.

Someone from among that upper echelon had to go out in the first 2 weeks and, as we now know, it was Adelaide, making them the highest MARS rated team to finish fifth at the end of the season.

(Adelaide aren't as unlucky as the Carlton side of 2001, however, who finished 6th with a MARS Rating of 1,037.9.)