In the 18 Grand Finals to date that have involved the teams from 1st and 3rd, the minor premier has an 11-7 record, which represents a 61% success rate. This is only slightly better than the minor premiers' record against teams coming 2nd, which is 33-23 or about 59%.

Overall, the minor premiers have missed only 13 of the 111 Grand Finals and have won 62% of those they've been in.

By comparison, teams finishing 2nd have appeared in 68 Grand Finals (61%) and won 44% of them. In only 12 of those 68 appearances have they faced a team from lower on the ladder; their record for these games is 7-5, or 58%.
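As a quick check on how those figures for 2nd-placed teams fit together, here is a minimal Python sketch. It uses only numbers quoted in this post (the 33-23 record of minor premiers against teams from 2nd, flipped to 23-33, the 7-5 record against lower-placed teams, and the 111 Grand Finals played to date). The variable names and the `win_rate` helper are my own, purely for illustration.

```python
def win_rate(wins, losses):
    """Fraction of games won, given a win-loss record."""
    return wins / (wins + losses)

# Record of 2nd-placed teams against the minor premier:
# minor premiers are 33-23 against 2nd, so 2nd is 23-33.
second_vs_first = (23, 33)
# Record of 2nd-placed teams against teams from lower on the ladder.
second_vs_lower = (7, 5)

wins = second_vs_first[0] + second_vs_lower[0]
losses = second_vs_first[1] + second_vs_lower[1]
appearances = wins + losses
total_grand_finals = 111

print(f"Appearances: {appearances} ({appearances / total_grand_finals:.0%})")
print(f"Overall win rate: {win_rate(wins, losses):.0%}")
print(f"Win rate v lower-placed teams: {win_rate(*second_vs_lower):.0%}")
```

The two splits add up to the 68 appearances and the overall win rate quoted above, which is a handy sanity check that the records are being read the right way round.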

Teams from 3rd and 4th positions have each made about the same number of appearances, earning a Grand Final spot about 1 year in 4. Whilst their rates of appearance are very similar, their success rates are vastly different, with teams from 3rd winning 46% of the Grand Finals they've made, and those from 4th winning only 27% of them.

That means that teams from 3rd have a better record than teams from 2nd (46% versus 44%), largely because they have more often avoided the minor premier: teams from 3rd have faced a team other than the minor premier in 25% of their Grand Final appearances, whereas teams from 2nd have done so in only 18% of theirs.
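The argument here is really a weighted average: a team's overall win rate is its rate against the minor premier and its rate against everyone else, weighted by how often it meets each. The rough sketch below backs out the win rate of 3rd-placed teams against opponents other than the minor premier. That rate is not quoted in this post, so treat the result as an approximation implied by the rounded 46% and 25% figures rather than an exact record. The function name is hypothetical.

```python
def implied_other_rate(overall, share_other, rate_vs_first):
    """Solve overall = (1 - share_other) * rate_vs_first + share_other * x for x."""
    return (overall - (1 - share_other) * rate_vs_first) / share_other

# 3rd-placed teams: minor premiers are 11-7 against them, so 3rd is 7-11.
third_vs_first = 7 / 18
# Assumption: the rounded 46% overall rate and 25% share are treated as exact.
third_vs_other = implied_other_rate(0.46, 0.25, third_vs_first)

# 2nd-placed teams: 23-33 against the minor premier, 7-5 against the rest.
second_vs_first = 23 / 56
second_vs_other = 7 / 12
second_overall = (56 / 68) * second_vs_first + (12 / 68) * second_vs_other

print(f"3rd v minor premier: {third_vs_first:.0%}, v others (implied): {third_vs_other:.0%}")
print(f"2nd v minor premier: {second_vs_first:.0%}, v others: {second_vs_other:.0%}")
print(f"2nd overall, rebuilt from the two splits: {second_overall:.0%}")
```

On these numbers, teams from 3rd have actually fared slightly worse against the minor premier than teams from 2nd, so their better overall record comes from meeting other opponents more often and doing well when they do.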

Ladder positions 5 and 6 have provided only 6 Grand Finalists between them, and only 2 Flags. Surprisingly, both wins have been against minor premiers - in 1998, when 5th-placed Adelaide beat North Melbourne, and in 1900 when 6th-placed Melbourne defeated Fitzroy. (Note that the finals systems have, especially in the early days of footy, been fairly complex, so not all 6ths are created equal.)

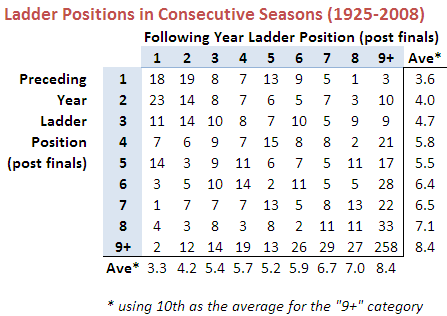

One conclusion I'd draw from the table above is that ladder position is important, but only mildly so, in predicting the winner of the Grand Final. For example, only 69 of the 111 Grand Finals, or about 62%, have been won by the team finishing higher on the ladder.

It turns out that ladder position - or, more correctly, the difference in ladder position between the two grand finalists - is also a very poor predictor of the margin in the Grand Final.