Adding Some Spline to Your Models

Creating the recent blog on predicting the Grand Final margin based on the difference in the teams' MARS Ratings set me off once again down the path of building simple models to predict game margin.

It usually doesn't take much.

Firstly, here's a simple linear model using MARS Ratings differences that repeats what I did for that recent blog post but uses every game since 1999, not just Grand Finals.

It suggests that you can predict game margins - from the viewpoint of the home team - by completing the following steps:

- subtract the away team's MARS Rating from the home team's MARS Rating

- multiply this difference by 0.736

- add 9.871 to the result from the previous step.

One interesting feature of this model is that it suggests that home ground advantage is worth about 10 points.
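The three steps above can be sketched in a few lines of Python. The function name and the example ratings are illustrative labels only; the two coefficients are the ones quoted above.

```python
def predict_margin_from_ratings(home_rating, away_rating):
    """Predicted home-team margin from the simple linear MARS Ratings model."""
    rating_diff = home_rating - away_rating  # step 1: home minus away
    return 0.736 * rating_diff + 9.871       # steps 2 and 3: scale, then add the intercept

# Two equally rated teams (the rating values here are made up): the prediction
# collapses to the intercept, which is the home ground advantage estimate.
print(round(predict_margin_from_ratings(1000.0, 1000.0), 3))

# A home team rated 10 Ratings points higher than its opponent.
print(round(predict_margin_from_ratings(1010.0, 1000.0), 3))
```

With a Ratings difference of zero the only thing left is the intercept, which is why it can be read as the home ground advantage.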

The R-squared number that appears on the chart tells you that this model explains 21.1% of the variability in game margins.

You might recall we've found previously that we can do better than this by using the home team's victory probability implied by its head-to-head price.

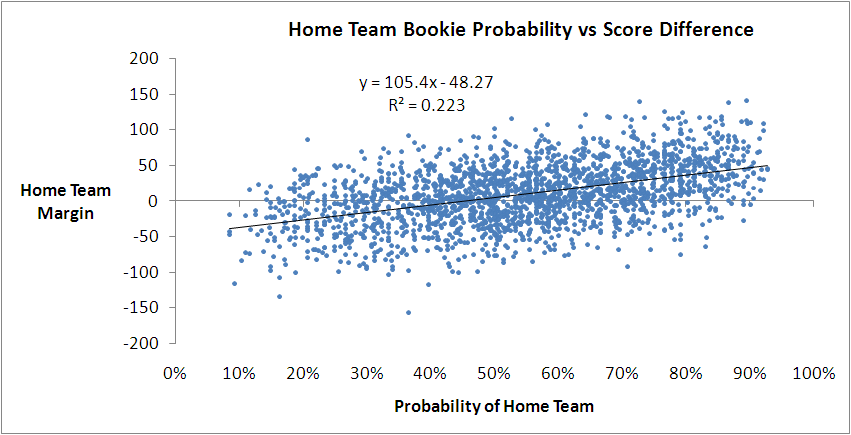

This model says that you can predict the home team margin by multiplying its implied probability by 105.4 and then subtracting 48.27. It explains 22.3% of the observed variability in game margins, which is 1.2 percentage points more than we can explain with the simple model based on MARS Ratings.

With this model we can obtain another estimate of the home team advantage by forecasting the margin with a home team probability of 50%. That gives an estimate of 4.4 points, which is much smaller than we obtained with the MARS-based model earlier.
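As a quick check of that arithmetic, here is a minimal Python sketch of the probability-based model. The function names are hypothetical; the coefficients are those quoted above.

```python
def predict_margin_from_prob(home_prob):
    """Predicted home-team margin from the bookie's implied home-team probability."""
    return 105.4 * home_prob - 48.27

def zero_margin_prob():
    """Implied probability at which the model predicts a margin of zero."""
    return 48.27 / 105.4

# An even-money home team: this is the home ground advantage estimate.
print(round(predict_margin_from_prob(0.50), 2))

# The home-team probability at which the model predicts a draw.
print(round(zero_margin_prob(), 3))
```

Put another way, the model only predicts a loss for the home team once its implied probability drops below roughly 46%.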

(EDIT: On reflection, I should have been clearer about the relative interpretation of this estimate of home ground advantage in comparison to that from the MARS Rating based model above. They're not measuring the same thing.

The earlier estimate of about 10 points is a more natural estimate of home ground advantage. It's an estimate of how many more points a home team can be expected to score than an away team of equal quality based on MARS Rating, since the MARS Rating of a team for a particular game does not include any allowance for whether or not it's playing at home or away.

In comparison, this latest estimate of 4.4 points is a measure of the "unexpected" home ground advantage that has historically accrued to home teams, over-and-above the advantage that's already built into the bookie's probabilities. It's a measure of how many more points home teams have scored than away teams when the bookie has rated both teams as even money chances, taking into account the fact that one of the teams is (possibly) at home.

It's entirely possible that the true home ground advantage is about 10 points and that, historically, the bookie has priced only about 5 or 6 points into the head-to-head prices, leaving the excess of 4.4 that we're seeing. In fact, this is, if memory serves me, consistent with earlier analyses that suggested home teams have been receiving an unwarranted benefit of about 2 points per game on line betting.

Which, again, is why MAFL wagers on home teams.)

Perhaps we can transform the probability variable and explain even more of the variability in game margins.

In another earlier blog we found that the handicap a team received could be explained by using what's called the logit transformation of the bookie's probability, which is ln(Prob/(1-Prob)).

Let's try that.

We do see some improvement in the fit, but it's only another 0.2% to 22.5%. Once again we can estimate home ground advantage by evaluating this model with a probability of 50%. That gives us 4.4 points, the same as we obtained with the previous bookie-probability based model.
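The reason the 50% estimate is easy to read off this model is that the logit of 0.5 is exactly zero, so the prediction at even money is just the intercept. A small Python sketch follows, assuming the fitted model has the usual intercept-plus-slope form. The slope used below is a placeholder, since the fitted value isn't quoted in the text; only the 4.4-point figure comes from above.

```python
import math

def logit(prob):
    """Log-odds transformation of a probability: ln(Prob / (1 - Prob))."""
    return math.log(prob / (1.0 - prob))

def predict_margin_logit(home_prob, intercept, slope):
    """Margin prediction of the assumed form intercept + slope * logit(Prob)."""
    return intercept + slope * logit(home_prob)

# logit(0.5) is ln(1) = 0, so the slope drops out at even money.
print(logit(0.5))

# Intercept of 4.4 taken from the text; the slope of 20.0 is a made-up placeholder.
print(predict_margin_logit(0.5, intercept=4.4, slope=20.0))
```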

A quick model-fitting analysis of the data in Eureqa gives us one more transformation to try: exp(Prob). Here's how that works out:

We explain another 0.1% of the variability with this model as we inch our way to 22.6%. With this model the estimated home-ground advantage is 2.6 points, which is the lowest we've seen so far.

If you look closely at the first model we built using bookie probabilities you'll notice that there seem to be more points above the fitted line than below it for probabilities from somewhere around 60% onwards.

Statistically, there are various ways that we could deal with this, one of which is by using Multivariate Adaptive Regression Splines.

(The R package with which I created my MARS models - R being the statistical software that I use for most of my analysis - is called earth since, for legal reasons, it can't be called MARS. There is, however, another R package that also creates MARS models, albeit in a different format. The maintainer of the earth package couldn't resist the temptation to call the function that converts from one model format to the other mars.to.earth. Nice.)

The benefit that MARS models bring us is the ability to incorporate 'kinks' in the model and to let the data determine how many such kinks to incorporate and where to place them.

Running earth on the bookie probability and margin data gives the following model:

Predicted Margin = 20.7799 + if(Prob > 0.6898155, 162.37738 x (Prob - 0.6898155),0) + if(Prob < 0.6898155, -91.86478 x (0.6898155 - Prob),0)

This is a model with one kink at a probability of around 69%, and it does a slightly better job at explaining the variability in game margins: it gives us an R-squared of 22.7%.
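The two if() terms are what the MARS literature calls hinge functions: each is zero on one side of the kink and linear on the other. Here is a minimal Python sketch of the fitted equation. It is a hand transcription of the formula above, not output from earth, and the function names are illustrative.

```python
KNOT = 0.6898155  # location of the kink, taken from the fitted equation

def hinge(x):
    """max(0, x): zero on one side of the kink, linear on the other."""
    return max(0.0, x)

def predict_margin_mars(home_prob):
    """Predicted home-team margin from the one-kink MARS model."""
    return (20.7799
            + 162.37738 * hinge(home_prob - KNOT)   # active only above the kink
            - 91.86478 * hinge(KNOT - home_prob))   # active only below the kink

# At the kink both hinge terms vanish and only the intercept is left.
print(round(predict_margin_mars(KNOT), 4))

# Evaluating at 50%, as was done for the earlier models.
print(round(predict_margin_mars(0.50), 2))
```

Below the kink the prediction rises by about 0.92 points for each percentage point of probability; above it, by about 1.62 points.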

When you overlay it on the actual data, it looks like this.

You can see the model's distinctive kink in the diagram, by virtue of which it seems to do a better job of bisecting the data for games with higher probabilities.

It's hard to keep all of these models based on bookie probability in our head, so let's bring them together by charting their predictions for a range of bookie probabilities.

For probabilities between about 30% and 70%, which approximately equates to prices in the $1.35 to $3.15 range, all four models give roughly the same margin prediction for a given bookie probability. Outside that range of probabilities, however, they differ by as much as 10 to 15 points. Since only about 37% of games have bookie probabilities outside the 30% to 70% range, none of the models is penalised too heavily for producing errant margin forecasts for these probability values.
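The comparison can be partly reproduced from the equations quoted in the text. The Python sketch below covers only the linear and the MARS models, since the fitted coefficients for the logit and exp(Prob) models aren't given here; the probabilities chosen for the table are arbitrary.

```python
KNOT = 0.6898155

def linear_margin(home_prob):
    """Linear model on bookie probability."""
    return 105.4 * home_prob - 48.27

def mars_margin(home_prob):
    """One-kink MARS model on bookie probability."""
    return (20.7799
            + 162.37738 * max(0.0, home_prob - KNOT)
            - 91.86478 * max(0.0, KNOT - home_prob))

def gap(home_prob):
    """Absolute difference between the two predictions, in points."""
    return abs(linear_margin(home_prob) - mars_margin(home_prob))

for pct in (10, 30, 50, 70, 90, 95):
    p = pct / 100
    print(f"{pct:>3}%  linear {linear_margin(p):7.1f}  "
          f"MARS {mars_margin(p):7.1f}  gap {gap(p):5.1f}")
```

For these two models the gap stays within a few points through the middle of the range and only climbs past 10 points for the very shortest-priced favourites.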

So far then, the best model we've produced has used only bookie probability and a MARS modelling approach.

Let's finish by adding the other MARS back into the equation - my MARS Ratings, which bear no resemblance to the MARS algorithm, and just happen to share a name. A bit like John Howard and John Howard.

This gives us the following model:

Predicted Margin = 14.487934 + if(Prob > 0.6898155, 78.090701 x (Prob - 0.6898155),0) + if(Prob < 0.6898155, -75.579198 x (0.6898155 - Prob),0) + if(MARS_Diff < -7.29, 0, 0.399591 x (MARS_Diff + 7.29))

The model described by this equation is kinked with respect to bookie probability in much the same way as the previous model. There's a single kink located at the same probability, though the slopes to the left and right of the kink are smaller in this latest model.

There's also a kink for the MARS Rating variable (which I've called MARS_Diff here), but it's a kink of a different kind. For MARS Ratings differences below -7.29 Ratings points - that is, where the home team is rated 7.29 Ratings points or more below the away team - the contribution of the Ratings difference to the predicted margin is 0. Then, for every 1 Rating point increase in the difference above -7.29, the predicted margin goes up by about 0.4 points.
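Here is the two-variable equation as a minimal Python sketch, again a hand transcription rather than earth output. The function and argument names are illustrative.

```python
PROB_KNOT = 0.6898155  # kink in bookie probability
DIFF_KNOT = -7.29      # kink in MARS Ratings difference (home minus away)

def predict_margin_mars2(home_prob, mars_diff):
    """Predicted home-team margin from bookie probability and MARS Ratings difference."""
    return (14.487934
            + 78.090701 * max(0.0, home_prob - PROB_KNOT)
            - 75.579198 * max(0.0, PROB_KNOT - home_prob)
            + 0.399591 * max(0.0, mars_diff - DIFF_KNOT))  # zero below -7.29

# Below the Ratings kink the Ratings difference contributes nothing ...
print(predict_margin_mars2(0.60, -20.0) == predict_margin_mars2(0.60, -7.29))

# ... and above it, 10 more Ratings points are worth about 4 points of margin.
print(round(predict_margin_mars2(0.60, 2.71) - predict_margin_mars2(0.60, -7.29), 2))
```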

This final model, which I think can still legitimately be called a simple one, has an R-squared of 23.5%. That's a further increase of 0.8%, which can loosely be thought of as the contribution of MARS Ratings to the explanation of game margins over and above that which can be explained by the bookie's probability assessment of the home team's chances.

All You Ever Wanted to Know About Favourite-Longshot Bias ...

Previously, on at least a few occasions, I've looked at the topic of the Favourite-Longshot Bias and whether or not it exists in the TAB Sportsbet wagering markets for AFL.

A Favourite-Longshot Bias (FLB) is said to exist when favourites win at a rate in excess of their price-implied probability and longshots win at a rate less than their price-implied probability. So if, for example, teams priced at $10 - ignoring the vig for now - win at a rate of just 1 time in 15, this would be evidence for a bias against longshots. In addition, if teams priced at $1.10 won, say, 99% of the time, this would be evidence for a bias towards favourites.
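To put numbers on those two examples, here is a small Python sketch that converts a price to its implied probability (ignoring the vig, as above) and works out the return from a $1 level-stake bet. The function names are illustrative.

```python
def implied_prob(price):
    """Price-implied probability, ignoring the vig."""
    return 1.0 / price

def return_per_dollar(win_rate, price):
    """Average profit per $1 staked at this price, given the actual win rate."""
    return win_rate * price - 1.0

# Longshots at $10 that win only 1 time in 15.
print(round(implied_prob(10.0), 3), round(return_per_dollar(1 / 15, 10.0), 3))

# Favourites at $1.10 that win 99% of the time.
print(round(implied_prob(1.10), 3), round(return_per_dollar(0.99, 1.10), 3))
```

In the first case the bettor loses about a third of every dollar staked; in the second the bettor makes about 9 cents in the dollar, which is the pattern an FLB describes.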

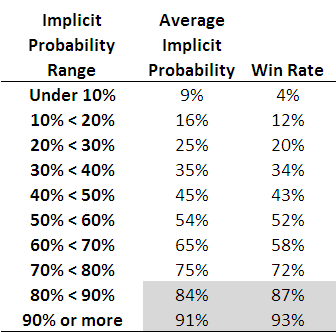

When I've considered this topic in the past I've generally produced tables such as the following, which are highly suggestive of the existence of such an FLB.

Each row of this table, which is based on all games from 2006 to the present, corresponds to the results for teams with price-implied probabilities in a given range. The first row, for example, is for all those teams whose price-implied probability was less than 10%. This equates, roughly, to teams priced at $9.50 or more. The average implied probability for these teams has been 9%, yet they've won at a rate of only 4%, less than one-half of their 'expected' rate of victory.

As you move down the table you need to arrive at the second-last row before you come to one where the win rate exceeds the expected rate (i.e. the average implied probability). That's fairly compelling evidence for an FLB.

This empirical analysis is interesting as far as it goes, but we need a more rigorous statistical approach if we're to take it much further. And heck, one of the things I do for a living is build statistical models, so you'd think that by now I might have thrown such a model at the topic ...

A bit of poking around on the net uncovered this paper which proposes an eminently suitable modelling approach, using what are called conditional logit models.

In this formulation we seek to explain a team's winning rate purely as a function of (the natural log of) its price-implied probability. There's only one parameter to fit in such a model and its value tells us whether or not there's evidence for an FLB: if it's greater than 1 then there is evidence for an FLB, and the larger it is the more pronounced is the bias.
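For the curious, here is a minimal Python sketch of how such a one-parameter model could be fitted. It assumes the standard conditional logit form, in which each team's chance of winning is proportional to its implied probability raised to the power of the parameter (called beta here). The toy data are made up purely to exercise the code, and the ternary-search fit is a stand-in for whatever routine a statistics package would use.

```python
import math

def modelled_win_probs(implied_probs, beta):
    """Conditional logit: weight each team by implied_prob ** beta, then normalise."""
    weights = [p ** beta for p in implied_probs]
    total = sum(weights)
    return [w / total for w in weights]

def log_likelihood(games, beta):
    """games is a list of (implied_probs, winner_index) pairs, one per game."""
    return sum(math.log(modelled_win_probs(probs, beta)[winner])
               for probs, winner in games)

def fit_beta(games, lo=0.0, hi=3.0, tol=1e-6):
    """Maximise the (concave) log-likelihood over beta by ternary search."""
    while hi - lo > tol:
        m1 = lo + (hi - lo) / 3
        m2 = hi - (hi - lo) / 3
        if log_likelihood(games, m1) < log_likelihood(games, m2):
            lo = m1
        else:
            hi = m2
    return (lo + hi) / 2

# Made-up data: a 70% favourite that wins three games out of four.
toy_games = [([0.7, 0.3], 0)] * 3 + [([0.7, 0.3], 1)]
print(round(fit_beta(toy_games), 3))
```

In the toy data the favourite wins more often than its 70% implied probability, so the fitted parameter comes out above 1. A value of exactly 1 would reproduce the (normalised) implied probabilities.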

When we fit this model to the data for the period 2006 to 2010 the fitted value of the parameter is 1.06, which provides evidence for a moderate level of FLB. The following table gives you some idea of the size and nature of the bias.

The first row applies to those teams whose price-implied probability of victory is 10%. A fair-value price for such teams would be $10 but, with a 6% vig applied, these teams would carry a market price of around $9.40. The modelled win rate for these teams is just 9%, which is slightly less than their implied probability. So, even if you were able to bet on these teams at their fair-value price of $10, you'd lose money in the long run. Because, instead, you can only bet on them at $9.40 or thereabouts, in reality you lose even more - about 16c in the dollar, as the last column shows.

We need to move all the way down to the row for teams with 60% implied probabilities before we reach a row where the modelled win rate exceeds the implied probability. The excess is not, regrettably, enough to overcome the vig, which is why the rightmost entry for this row is also negative - as, indeed, it is for every other row underneath the 60% row.
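The rows of that table can be approximated with a few lines of Python. This sketch makes three assumptions: the model takes the conditional logit form with each team weighted by its implied probability raised to the fitted parameter, each game has two teams whose implied probabilities sum to 1, and the 6% vig simply shaves 6% off the fair-value price (as in the $10 to $9.40 example above).

```python
BETA = 1.06  # fitted parameter for 2006 to 2010, from the text
VIG = 0.06   # vig, assumed to scale the fair-value price down by 6%

def modelled_win_rate(implied_prob, beta=BETA):
    """Modelled win rate for a team in a two-team contest."""
    own = implied_prob ** beta
    other = (1.0 - implied_prob) ** beta
    return own / (own + other)

def market_price(implied_prob, vig=VIG):
    """Fair-value price reduced by the vig."""
    return (1.0 / implied_prob) * (1.0 - vig)

def return_per_dollar(implied_prob):
    """Modelled profit per $1 level-stake bet at the market price."""
    return modelled_win_rate(implied_prob) * market_price(implied_prob) - 1.0

for pct in range(10, 100, 10):
    p = pct / 100
    print(f"{pct:>3}%  price ${market_price(p):5.2f}  "
          f"modelled win rate {modelled_win_rate(p):6.1%}  "
          f"return {return_per_dollar(p):+.3f}")
```

Under these assumptions the 10% row comes out close to the figures quoted above, the modelled win rate first exceeds the implied probability in the 60% row, and the return is negative in every row.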

Conclusion: there has been an FLB on the TAB Sportsbet market for AFL across the period 2006-2010, but it hasn't been generally exploitable (at least not with level-stake wagering).

The modelling approach I've adopted also allows us to consider subsets of the data to see if there's any evidence for an FLB in those subsets.

I've looked firstly at the evidence for FLB considering just one season at a time, then considering only particular rounds across the five seasons.

So, there is evidence for an FLB for every season except 2007. For that season there's evidence of a reverse FLB, which means that longshots won more often than they were expected to and favourites won less often. In fact, in that season, the modelled success rate of teams with implied probabilities of 20% or less was sufficiently high to overcome the vig and make wagering on them a profitable strategy.

That year aside, 2010 has been the year with the smallest FLB. One way to interpret this is as evidence for an increasing level of sophistication in the TAB Sportsbet wagering market, from punters or the bookie, or both. Let's hope not.

Turning next to a consideration of portions of the season, we can see that there's tended to be a very mild reverse FLB through rounds 1 to 6, a mild to strong FLB across rounds 7 to 16, a mild reverse FLB for the last 6 rounds of the season and a huge FLB in the finals. There's a reminder in that for all punters: longshots rarely win finals.

Lastly, I considered a few more subsets, and found:

- No evidence of an FLB in games that are interstate clashes (fitted parameter = 0.994)

- Mild evidence of an FLB in games that are not interstate clashes (fitted parameter = 1.03)

- Mild to moderate evidence of an FLB in games where there is a home team (fitted parameter = 1.07)

- Mild to moderate evidence of a reverse FLB in games where there is no home team (fitted parameter = 0.945)
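To get a feel for what those four fitted parameters mean in practice, this Python sketch shows the modelled win rate of a team with a 20% implied probability under each of them. It assumes a two-team contest and the same power-of-implied-probability form for the model; the 20% example is an arbitrary choice.

```python
def modelled_win_rate(implied_prob, beta):
    """Two-team conditional logit win rate for a given fitted parameter."""
    own = implied_prob ** beta
    other = (1.0 - implied_prob) ** beta
    return own / (own + other)

# Fitted parameters quoted in the list above.
subsets = {
    "interstate clashes": 0.994,
    "non-interstate clashes": 1.03,
    "games with a home team": 1.07,
    "games with no home team": 0.945,
}

for label, beta in subsets.items():
    rate = modelled_win_rate(0.20, beta)
    print(f"{label:<24} beta {beta:5.3f}  modelled win rate at 20%: {rate:.1%}")
```

Parameters above 1 pull the longshot's modelled win rate below 20%, and parameters below 1 push it above.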

FLB: done.