Does The Favourite Have It Covered?

You've wagered on Geelong - a line bet in which you've given 46.5 points start - and they lead by 42 points at three-quarter time. What price should you accept from someone wanting to purchase your wager? They also led by 44 points at quarter time and 43 points at half time. What prices should you have accepted then?

In this blog I've analysed line betting results since 2006 and derived three models, one for each quarter break, to answer questions similar to the one above. These models take as inputs the handicap offered by the favourite and the favourite's margin relative to that handicap at a particular quarter break. The output they provide is the probability that the favourite will go on to cover the spread given the situation they find themselves in at the end of that quarter.

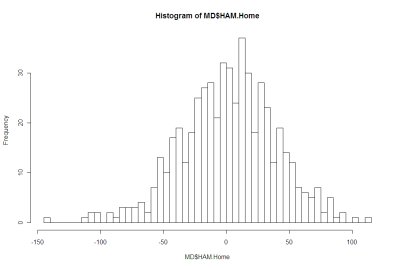

The chart below plots these probabilities against margins relative to the spread at quarter time for 8 different handicap levels.

Negative margins mean that the favourite has already covered the spread, positive margins that there's still some spread to be covered.

The top line tracks the probability that a 47.5 point favourite covers the spread given different margins relative to the spread at quarter time. So, for example, if the favourite has the spread covered by 5.5 points (ie leads by 53 points) at quarter time, there's a 90% chance that the favourite will go on to cover the spread at full time.

In comparison, the bottom line tracks the probability that a 6.5 point favourite covers the spread given different margins relative to the spread at quarter time. If a favourite such as this has the spread covered by 5.5 points (ie leads by 12 points) at quarter time, there's only a 60% chance that this team will go on to cover the spread at full time. The logic of this is that a 6.5 point favourite is, relatively, less strong than a 47.5 point favourite and so more liable to fail to cover the spread for any given margin relative to the spread at quarter time.

Another way to look at this same data is to create a table showing what margin relative to the spread is required for an X-point favourite to have a given probability of covering the spread.

So, for example, for the chances of covering the spread to be even, a 6.5 point favourite can afford to lead by only 4 or 5 (ie be 2 points short of covering) at quarter time and a 47.5 point favourite can afford to lead by only 8 or 9 (ie be 39 points short of covering).
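The sign convention here is easy to get backwards, so the following is a minimal Python sketch of it, using the worked figures from the text. The function name `margin_relative_to_spread` is a hypothetical label for illustration and is not part of the models themselves.

```python
def margin_relative_to_spread(handicap: float, lead: float) -> float:
    """Points the favourite still needs in order to cover the spread.

    Negative values mean the spread is already covered,
    positive values mean there is still some spread to cover.
    """
    return handicap - lead


# A 47.5 point favourite leading by 53 has the spread covered by 5.5 points.
print(margin_relative_to_spread(47.5, 53))   # -5.5

# A 6.5 point favourite leading by 4.5 is 2 points short of covering.
print(margin_relative_to_spread(6.5, 4.5))   # 2.0

# A 47.5 point favourite leading by 8.5 is 39 points short of covering.
print(margin_relative_to_spread(47.5, 8.5))  # 39.0
```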

The following diagrams provide the same chart and table for the favourite's position at half time.

Finally, these next diagrams provide the same chart and table for the favourite's position at three-quarter time.

I find this last table especially interesting as it shows how fine the difference is at three-quarter time between likely success and possible failure in terms of covering the spread. The difference between a 50% and a 75% probability of covering is only about 9 points and between a 75% and a 90% probability is only 9 points more.

To finish then, let's go back to the question with which I started this blog. A 46.5 point favourite leading by 42 points at three-quarter time is about a 69.4% chance to go on and cover. So, assuming you backed the favourite at $1.90, your expected profit on a 1 unit wager is 0.694 x 0.9 - 0.306 = +0.32 units. You'd therefore want to be paid about 1.32 units for your wager, given that you want your original stake back too.

A 46.5 point favourite leading by 44 points at quarter time is about an 85.5% chance to go on and cover, and a similar favourite leading by 43 points at half time is about an 84.7% chance to go on and cover. The expected profits for these are +0.62 and +0.61 units respectively, so you'd have wanted about 1.62 and 1.61 units to surrender these bets (a little more if you're a risk-taker and a little less if you're risk-averse, but that's a topic for another day ...).
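The arithmetic in these last two paragraphs can be sketched in a few lines of Python. This is only an illustration under the assumptions stated in the text: a 1 unit stake, a price of $1.90, and a risk-neutral seller. The function names `expected_profit` and `fair_sale_price` are hypothetical, and the probabilities are simply the model outputs quoted above rather than anything the code computes.

```python
def expected_profit(p_cover: float, price: float = 1.90, stake: float = 1.0) -> float:
    """Expected profit on a line bet, given the probability of covering.

    A win returns (price - 1) units of profit per unit staked;
    a loss costs the stake.
    """
    return p_cover * (price - 1.0) * stake - (1.0 - p_cover) * stake


def fair_sale_price(p_cover: float, price: float = 1.90, stake: float = 1.0) -> float:
    """Risk-neutral price for selling the bet: the stake plus the expected profit."""
    return stake + expected_profit(p_cover, price, stake)


# Probabilities quoted in the text for a 46.5 point favourite.
situations = {
    "quarter time, leading by 44": 0.855,
    "half time, leading by 43": 0.847,
    "three-quarter time, leading by 42": 0.694,
}

for label, p in situations.items():
    print(f"{label}: profit {expected_profit(p):+.2f}, sell for {fair_sale_price(p):.2f}")
```

Note that the fair sale price simplifies to the probability of covering multiplied by the price (for example, 0.694 x 1.90), which is just the expected value of the payout.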