Introducing ChiPS

In years past, the MAFL Fund, Tipping and Prediction algorithms have undergone significant revision during the off-season, partly in reaction to their poor performances but partly also because of my fascination - some might call it obsession - with the empirical testing of new-to-me analytic and modelling techniques. Whilst that's been enjoyable for me, I imagine that it's made MAFL frustrating and difficult to follow at times.

So, this year is different. I've decided to make only two changes to the MAFL algorithms and Funds for season 2014: a reweighting of the three constituent Funds in the Recommended Portfolio and the introduction of two new Predictors, one a Margin Predictor and the other a Probability Predictor.

Those Fund reweightings have not yet been fully determined, but they will almost certainly involve an upweighting of the Margin Fund and, obviously, downweightings of at least one of the other two Funds. I'll post the final weightings at a time nearer the start of the season.

The new Predictors have, however, been finalised and it's in this blog that I'll be describing their creation.

ChiPS: The Chi Predictive System

The Chi Predictive System is named in honour of the inaugural MAFL Mascot, Chi, who unfortunately saw his last Grand Final in 2013. ChiPS' development draws heavily on the work I've been blogging about recently relating to the Very Simple Rating System (VSRS), an Elo-style rating system that can be used to determine AFL team ratings on the basis of game outcomes and game venues. As I've written before, both here on MAFL and on other blogs, Chi wasn't noted for his intellect, so honouring his memory with something based on the VSRS seems entirely apt.

Andrew's recent post suggested a relatively simple but clever variation to the VSRS in which the value of k, used to update team ratings on the basis of the size of the difference between an actual and expected game outcome, is allowed to vary at different points in the season. The intuition for this is (at least) twofold:

- teams' ratings are more difficult to ascertain at the start of the season than at other times. Empirical evidence suggests that team class persists across off-seasons, but not fully and with considerable variability. By allowing for larger values of k in early rounds of a season we can more rapidly adjust team ratings to reflect actual results and demonstrated ability, and to dilute the impact of historical performances.

- actual results are likely to reflect relative team abilities more faithfully at some points in the season than at others. For example, games played late in the season involving teams with genuine Finals aspirations are likely to reveal more of the latent class of both teams.

Drawing on this insight, ChiPS is powered by a VSRS with intra-seasonally variable k.

Another difference between ChiPS and the versions of the VSRS I've described previously is the equation that ChiPS uses to determine the Expected Margin in any game.

Whereas the VSRS simply assumes that the expected margin of victory for the home team is the amount by which its Rating differs from its opponent's plus some home ground advantage (HGA), ChiPS also incorporates relative team form (measured as the change in team Ratings over the previous two games within the current season) and the Interstate Status of the contest.

ChiPS also recognises that HGA varies widely by venue and team and so includes separate parameters for all reasonably common combinations of home team and venue as measured over the past 15 seasons. An additional HGA parameter is used for all games where the home team is playing at a relatively unfamiliar venue (for example, the Roos at Manuka Oval).

Other things to note about these basic equations are that:

- An initial Rating of 1,000 was applied to every team as at the start of season 1999.

- Team form is set to zero for the first two rounds of any season.

- The carryover parameter, C, is constrained to lie in the (0,1) interval.

- All the equations are written from the viewpoint of the home team, which is determined as being the AFL-designated team in all regular season games and the higher-Rated team in all Finals. (I'm still not entirely convinced of my decision to base home team status in Finals on Ratings alone, but a more nuanced view will need to wait for another day/week/season).
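Since the equations themselves aren't reproduced here, what follows is only a sketch of the Expected Margin calculation described above. It assumes a simple additive form: the Rating difference, plus a venue-specific HGA (with a catch-all value for unfamiliar venues), plus a weighted difference in form, plus a weighted Interstate Status term. The names `ChipsParams`, `team_form` and `expected_margin`, and the treatment of Interstate Status as a single number, are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ChipsParams:
    """Hypothetical container for C-Marg tuning parameters (fitted values not shown)."""
    form_coef: float                         # weight on (home form - away form)
    interstate_coef: float                   # weight on Interstate Status
    unfamiliar_hga: float                    # HGA for uncommon home team/venue pairs
    hga: dict = field(default_factory=dict)  # (home_team, venue) -> HGA in points


def team_form(ratings_before_each_game: list, round_number: int) -> float:
    """Change in a team's Rating over its previous two games this season.

    The list holds the team's Rating before each of its games so far this
    season, ending with its current (pre-game) Rating. Form is zero in
    Rounds 1 and 2, as in ChiPS.
    """
    if round_number <= 2 or len(ratings_before_each_game) < 3:
        return 0.0
    return ratings_before_each_game[-1] - ratings_before_each_game[-3]


def expected_margin(home_rating: float, away_rating: float,
                    home_form: float, away_form: float,
                    interstate_status: float,
                    home_team: str, venue: str, p: ChipsParams) -> float:
    """Expected home-team margin under an assumed additive ChiPS-style equation."""
    # Fall back to the catch-all HGA when the team/venue pair is uncommon
    hga = p.hga.get((home_team, venue), p.unfamiliar_hga)
    return (home_rating - away_rating
            + hga
            + p.form_coef * (home_form - away_form)
            + p.interstate_coef * interstate_status)
```

For two teams Rated 1,000 with no form difference and no interstate component, this collapses to the HGA for that team and venue, which is the situation in the Round 1 worked example later in this post.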

The Probability Predictor in ChiPS, C-Prob, takes the predicted margin from C-Marg and converts it into an estimated victory probability for the home team by assuming that the actual margin differs from the predicted margin by an error drawn from a Normal distribution with zero mean and some fixed sigma, chosen optimally.
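Under that assumption the home team's victory probability is the standard Normal CDF evaluated at the predicted margin divided by sigma. A minimal sketch using `math.erf` from Python's standard library; the function name is hypothetical, and any sigma passed to it is a placeholder since the optimised value isn't quoted in this section.

```python
import math


def home_win_probability(predicted_margin: float, sigma: float) -> float:
    """P(actual margin > 0) when actual margin ~ Normal(predicted_margin, sigma).

    Equals Phi(predicted_margin / sigma), with the standard Normal CDF Phi
    written in terms of the error function.
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return 0.5 * (1.0 + math.erf(predicted_margin / (sigma * math.sqrt(2.0))))
```

A predicted margin of zero always maps to 50%, and predicted margins of equal size but opposite sign map to probabilities that sum to one.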

In the version of ChiPS to be used this year in MAFL, C-Marg's tuning parameters have been chosen to minimise the mean absolute error (MAE) across all games from 1999 to 2013 (ie the absolute difference between the outputs of equations (4) and (3) above), and C-Prob's sigma has been chosen to maximise the sum of the log probability scores (LPS) across that same period.
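Both criteria are easy to state in code. The sketch below assumes each game's log probability score takes the form 1 + log2(p), where p is the probability assigned to the eventual winner; neither the constant nor the log base changes which sigma maximises the total. It skips drawn games, and it uses a plain grid search over candidate sigmas as a stand-in for a proper optimiser. All function names are hypothetical, and the Normal conversion is repeated so the block runs on its own.

```python
import math


def home_win_probability(predicted_margin, sigma):
    # Phi(predicted_margin / sigma), via the error function
    return 0.5 * (1.0 + math.erf(predicted_margin / (sigma * math.sqrt(2.0))))


def mean_absolute_error(predicted, actual):
    """Mean of |actual margin - predicted margin| across games."""
    return sum(abs(a - p) for p, a in zip(predicted, actual)) / len(actual)


def total_log_probability_score(predicted, actual, sigma):
    """Sum over decided games of 1 + log2(probability assigned to the winner)."""
    total = 0.0
    for p_margin, a_margin in zip(predicted, actual):
        if a_margin == 0:  # draws are skipped in this sketch
            continue
        p_home = home_win_probability(p_margin, sigma)
        p_winner = p_home if a_margin > 0 else 1.0 - p_home
        # Floor avoids log2(0) if p_home rounds to exactly 0 or 1
        total += 1.0 + math.log2(max(p_winner, 1e-12))
    return total


def best_sigma(predicted, actual, candidates):
    """Grid-search stand-in for an optimiser: the candidate with the highest total LPS."""
    return max(candidates,
               key=lambda s: total_log_probability_score(predicted, actual, s))
```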

Optimal ChiPS Parameters

I've once again, for better or for worse - more probably the latter - relied on Excel's Solver add-in to determine the optimal values of the tuning parameters for C-Marg and C-Prob.

The table on the left records those estimated optima.

The values of k1 through k5 reveal that, as expected, results from games early in a season carry considerably more new information about the relative merits of the participants than do results from games played later in the season. We can infer this from k1's value of 9.72%, which is about 50% larger than the values of k2 and k3.

To put those parameters in context, consider a game played between two teams Rated 1,000, each playing in their home States at a venue where the HGA is 5 points. Now, assume that the home team won by 40 points. If that game took place in Round 1 it would alter the home team's Rating to 1,000 + 0.0972 x (40 - 5), or about 1,003.4, but if, instead, it occurred in Round 7, the home team's resultant Rating would be only 1,002.2.
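The arithmetic can be checked directly. This sketch assumes the update applies k to the difference between the actual and expected home-team margin, exactly as in the Round 1 calculation. The Round 7 value of k isn't quoted, so the last calculation backs out the k that the 1,002.2 figure implies. Function and variable names are hypothetical.

```python
def updated_rating(old_rating, k, actual_margin, expected_margin):
    """Elo-style update: move the Rating by k times the margin surprise."""
    return old_rating + k * (actual_margin - expected_margin)


k1 = 0.0972   # Round 1 value of k from the optimised parameters
expected = 5  # equal Ratings, no form (Round 1) and no interstate effect: just the HGA
actual = 40

round1_rating = updated_rating(1000.0, k1, actual, expected)
# k implied by the Round 7 Rating of 1,002.2 quoted above
implied_round7_k = (1002.2 - 1000.0) / (actual - expected)
print(round(round1_rating, 1), round(implied_round7_k, 3))  # → 1003.4 0.063
```

A natural companion assumption, not stated in the text, is that the away team's Rating moves by the same amount in the opposite direction.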

Similarly, the relative importance of games late in the home and away season is reflected in the large value of k4, and the relative unimportance - at least, from a team rating viewpoint - of Finals is reflected in the small value of k5. A victory in a Grand Final by 100 points would lead to a change in the participating teams' Ratings of only a single Rating Point or so.

According to the estimated optimum value of C, the carryover parameter, teams bring with them about 60% of the excess class, positive or negative, they'd accumulated by the end of the previous season. A team Rated 1,050 at the end of a season would, therefore, start a new season Rated 1,030, whereas one Rated 950 as they mad-Mondayed would greet the new year Rated 970.
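In other words, each team's Rating is pulled back towards the starting value of 1,000, retaining a fraction C of its distance from it. A sketch of that regression, with C set to the roughly 0.6 described above rather than the precise estimate; the function name is hypothetical.

```python
def start_of_season_rating(end_of_season_rating, carryover, baseline=1000.0):
    """Keep a fraction `carryover` (C) of the team's excess over the baseline."""
    if not 0.0 < carryover < 1.0:
        raise ValueError("C is constrained to the (0, 1) interval")
    return baseline + carryover * (end_of_season_rating - baseline)


C = 0.6  # approximate value described in the text
print(start_of_season_rating(1050.0, C), start_of_season_rating(950.0, C))
```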

Differences in form contribute, on average, about 2.7 points to the expected margin of an AFL game, based on the optimal coefficient of 0.653 shown here and on the all-season all-game average difference in form between opponents in any game of about 4.1 Rating Points. Interstate Status alone contributes, at its maximum, more than twice that much, with home teams playing an interstate rival enjoying an advantage of almost an entire goal in addition to their HGA.
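The form figure is simply the product of the fitted coefficient and the average form gap, and doubling it shows that "more than twice that much" and "almost an entire goal" are consistent. A quick check, taking 6 points per goal:

```python
form_coef = 0.653     # fitted form coefficient
avg_form_gap = 4.1    # average difference in form between opponents (Rating Points)
points_per_goal = 6

form_contribution = form_coef * avg_form_gap  # about 2.68 points
twice_form = 2 * form_contribution            # about 5.35 points, still short of a goal
print(round(form_contribution, 2), round(twice_form, 2), twice_form < points_per_goal)
# → 2.68 5.35 True
```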

As we've seen in previous blogs, HGAs vary markedly across team and venue combinations.

Geelong, at Docklands, have the best of it, basking in about a 4-goal advantage at that venue when playing as the home team. St Kilda also enjoy a significant points premium when playing at that venue, generating a little over 16 points more than we might otherwise expect when doing so. In contrast, the Pies would rather play their home games at venues other than Docklands: they've given up, on average, about 9 points start to their opponents when playing there as the home team against a Victorian opponent.

More generally, and as we'd expect, most teams have enjoyed at least a small advantage of between about 2 and 10 points when playing at home. The counterexamples have been Carlton, the Roos, Richmond and the Dogs at Docklands, and Collingwood and Richmond at the G: the last decade and a bit has truly been a bad time to be a Victorian team playing at home.

In-Sample ChiPS Performance

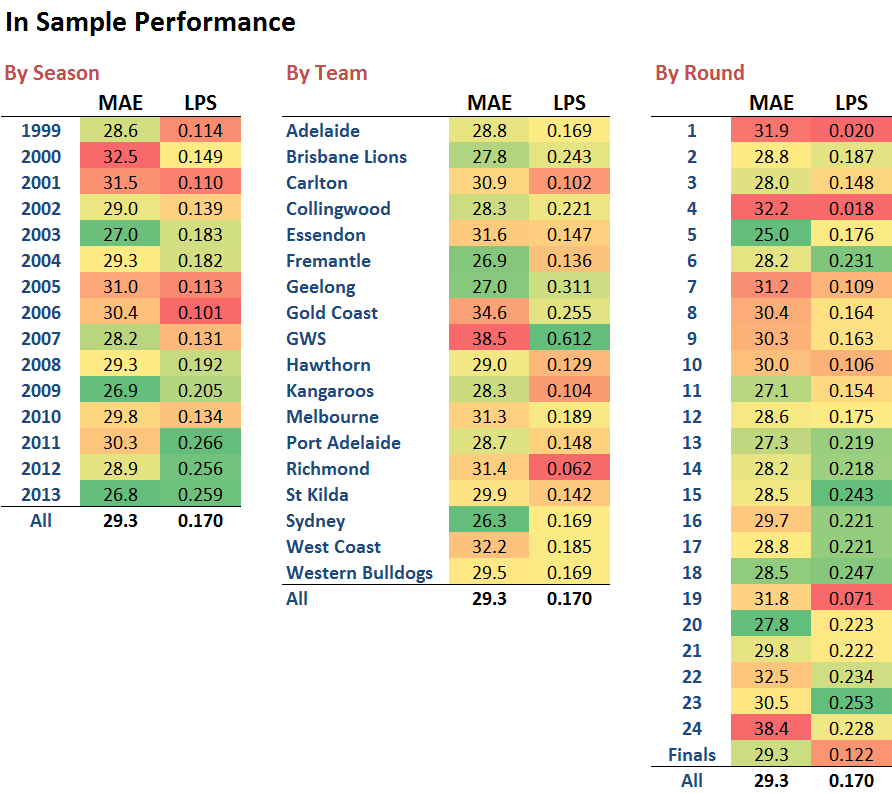

Employing these optimised parameter values for seasons 1999 to 2013 we find that ChiPS' overall performance has been most impressive in the most recent seasons, especially in the last three seasons if LPS is your metric of choice.

The 2013 MAE result would have been good enough to earn C-Marg 3rd place, and the LPS result would have left C-Prob in roughly equal-1st on the relevant MAFL Leaderboards. Furthermore, in 2012 C-Marg would have topped the MAFL Leaderboard while C-Prob would have finished 3rd. They're impressive results indeed. Assessing the performance of any Predictor in-sample is, however, always fraught, and the current situation is no different.

What gives me some solace, though, is that the ChiPS parameters have been optimised not for a single season but for the entirety of the 15 seasons from 1999 to 2013. A System optimised over 2,800 games can only bend and shape itself so far to fit the most recent 300 or so. Still, only actual post-sample performance will be a true measure of ChiPS' merits.

On a team-by-team basis we find that C-Marg has struggled most of all in terms of predicting the ultimate margin in games where GWS or Gold Coast are the home team. In these games, C-Marg has been in error by about 6 goals on average. It's also been relatively less effective in predicting the margin in games where Carlton, Essendon, Richmond or West Coast have been the home team, and relatively more effective when Brisbane, Fremantle, Geelong or Sydney have been the designated home team.

From a Probability Prediction viewpoint, C-Prob has struggled most with the unpredictability of Carlton, the Roos and, in particular, Richmond, and has been best calibrated in relation to GWS, Geelong, Gold Coast and the Brisbane Lions.

Moving, lastly, to a round-by-round view, we find that C-Marg's largest absolute errors come mostly in the first half of the season, especially in the first four rounds. C-Prob also struggles in this same early portion of the season.

C-Marg excels, however, from about Round 11 to Round 20, while C-Prob is strongest from about Round 13 to Round 23.
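Breakdowns like these, by home team or by round, come from grouping each game's absolute error and averaging within each group. A minimal sketch, assuming each game is a dict with hypothetical `predicted` and `actual` margin fields plus whatever field you want to group on (for example `home_team` or `round`):

```python
from collections import defaultdict


def mae_by_group(games, key):
    """Mean absolute margin error for each distinct value of game[key]."""
    error_sums = defaultdict(float)
    counts = defaultdict(int)
    for game in games:
        group = game[key]
        error_sums[group] += abs(game["actual"] - game["predicted"])
        counts[group] += 1
    return {group: error_sums[group] / counts[group] for group in error_sums}
```

Calling `mae_by_group(games, "round")` gives one MAE per round; swapping the absolute error for each game's log probability score gives the equivalent C-Prob view.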

Conclusion

ChiPS has, I think, earned itself a place in MAFL 2014. For sentiment's sake - surely amongst the worst possible bases on which to be making wagers - I'll probably be outlaying some non-MAFL Fund money on C-Prob's prognostications this year. Chi's not got a stellar record as punter or as tipster, however, so it's best that I go it alone ...