Estimating Forecaster Calibration of Pre-Game Probability Estimates

In the previous post we looked at the calibration of two in-running probability models across the entire span of the contest. For one of those models I used a bookmaker's pre-game head-to-head prices to establish credible pre-game assessments of teams' chances.

Whenever we convert bookmaker prices into probabilities, we have a decision to make about how to unwind the overround in them. In the past, I've presented (at least) three plausible methods for doing this:

- Overround equalising - the "traditional" approach, which assumes that overround is embedded in both the home and the away team prices equally

- Risk equalising - which assumes that overround is levied in such a way as to protect the bookmaker against underestimation of the true chances of either team by some fixed percentage point amount (see this blog for details)

- Log-Probability Score Optimising - which adopts a purely empirical stance and determines the optimal fixed percentage adjustment to the inverse of the home team's price such that the log probability score of the resulting probabilities is maximised (see this blog for details)

These methods yield the following equations:

- Overround Equalising: Prob(Home win) = Away Price / (Home Price + Away Price)

- Risk Equalising: Prob(Home win) = 1/Home Price - (1/Home Price + 1/Away Price - 1)/2

- LPS Optimising: Prob(Home win) = 1/Home Price - 1.0281%

In all cases, Prob(Away win) = 1 - Prob(Home win), as we ignore the roughly 1% chance of a draw.
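The three equations above can be sketched in a few lines of Python. The function names and the example prices are hypothetical and chosen only for illustration; the 1.0281% adjustment is the figure quoted above.

```python
def overround_equalising(home_price, away_price):
    # Prob(Home win) = Away Price / (Home Price + Away Price)
    return away_price / (home_price + away_price)


def risk_equalising(home_price, away_price):
    # Total overround is the amount by which the inverse prices exceed 1;
    # half of it is subtracted from the home team's inverse price.
    overround = 1 / home_price + 1 / away_price - 1
    return 1 / home_price - overround / 2


def lps_optimising(home_price, adjustment=0.010281):
    # Fixed subtraction of 1.0281 percentage points from the inverse home price.
    return 1 / home_price - adjustment


if __name__ == "__main__":
    home_price, away_price = 1.60, 2.35  # hypothetical prices, not from the post
    for name, p_home in [
        ("TAB-OE", overround_equalising(home_price, away_price)),
        ("TAB-RE", risk_equalising(home_price, away_price)),
        ("TAB-LPSO", lps_optimising(home_price)),
    ]:
        # Prob(Away win) is 1 - Prob(Home win) in every case.
        print(f"{name}: home {p_home:.4f}, away {1 - p_home:.4f}")
```

Note that when the two prices are equal, the first two methods both return exactly 50%, while the LPS-optimising method returns the inverse home price less the fixed adjustment.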

For the previous blog, I simply chose to adopt the overround equalising method. The question for this blog is: how well calibrated, empirically, are these three approaches at the start of the game, across all games from 2008 to 2016, and how do they compare with the probability estimates we can derive from MoSSBODS and MoSHBODS?

To make that assessment we'll proceed as we did in the previous blog, first establishing probability bins and then estimating the winning rate of home teams within each bin. For this purpose I've created 11 bins, each of which contains roughly the same number of forecasts across the five forecasters.
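As a rough illustration of the binning step, here is a minimal sketch that sorts one forecaster's home team probabilities, splits them into bins of near-equal size, and reports the average forecast and the observed home team winning rate in each bin. It is a simplification: it bins a single forecaster's forecasts, whereas the bins used for this post were formed across all five forecasters, and the function name `calibration_table` is hypothetical.

```python
def calibration_table(probs, home_wins, n_bins=11):
    """Return one (mean forecast, home win rate, count) tuple per bin.

    probs     : home team probability forecasts, each between 0 and 1
    home_wins : 1 if the home team won that game, otherwise 0
    """
    pairs = sorted(zip(probs, home_wins))  # order games by forecast
    n = len(pairs)
    rows = []
    for b in range(n_bins):
        # Integer arithmetic gives bins whose sizes differ by at most one.
        lo = b * n // n_bins
        hi = (b + 1) * n // n_bins
        chunk = pairs[lo:hi]
        if not chunk:
            continue  # fewer forecasts than bins
        mean_p = sum(p for p, _ in chunk) / len(chunk)
        win_rate = sum(w for _, w in chunk) / len(chunk)
        rows.append((mean_p, win_rate, len(chunk)))
    return rows
```

For a well-calibrated forecaster, the first two values in each tuple should be close to one another.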

The calibration chart that results is as below:

Recall that a well-calibrated forecaster will see teams win about X% of the time when he or she rates them X% chances, so the closer the points in the chart are to the dotted line, the better calibrated the forecaster.

Some observations on the results:

- Most of the forecasters tend to be too pessimistic about home team underdogs, which win, for example, about 30% of the time when they're assessed by the forecasters as about 25% chances

- MoSHBODS is something of an exception to this, but remains slightly too pessimistic

- Calibration levels are generally quite good in the 45 to 60% range

- MoSHBODS is generally too pessimistic about home teams that it rates as 60% or better chances

- This is also true to some extent for MoSSBODS, but less so

- On balance, across the entire range of feasible forecasts, every forecaster except TAB-LPSO tends to underrate home team chances.

- Among the three TAB-based forecasters, TAB-OE does better than TAB-RE for probability forecasts below about 40%, and better than TAB-LPSO for probability forecasts in the 30 to 75% range. Overall though, TAB-LPSO looks marginally the best of the three (despite the fact that the 1.0281% adjustment was determined a few years ago).

- MoSHBODS is, generally, better calibrated than MoSSBODS for probability forecasts below about 50%, while MoSSBODS is generally better calibrated for probability forecasts above about 50%, and especially for those above about 75%

Across all forecasts, the forecasters demonstrate fairly similar levels of calibration - a conclusion borne out by their Brier Scores for these games (recall that lower scores are better):

- MoSHBODS: 0.1850 (Rank 3)

- MoSSBODS: 0.1849 (Rank 2)

- TAB-OE: 0.1851 (Rank 5)

- TAB-RE: 0.1850 (Rank 4)

- TAB-LPSO: 0.1842 (Rank 1)
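For reference, a Brier Score of this kind can be computed as the average squared difference between the home team probability and the result. The sketch below assumes the single-outcome form (home win coded as 1, otherwise 0); the post does not spell out the exact variant used, and the function name is hypothetical.

```python
def brier_score(probs, home_wins):
    """Mean squared error of home team probabilities (lower is better).

    probs     : home team probability forecasts, each between 0 and 1
    home_wins : 1 if the home team won that game, otherwise 0
    """
    squared_errors = [(p - w) ** 2 for p, w in zip(probs, home_wins)]
    return sum(squared_errors) / len(squared_errors)
```

Under this form, a forecaster who rates every home team a 50% chance scores exactly 0.25, which provides a useful yardstick for the values above.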

The Log Probability Scores - which I define as 1 + the standard definition to avoid zero being best - tell a similar story (recall that higher scores are better):

- MoSHBODS: 0.2122 (Rank 4)

- MoSSBODS: 0.2137 (Rank 2)

- TAB-OE: 0.2118 (Rank 5)

- TAB-RE: 0.2131 (Rank 3)

- TAB-LPSO: 0.2169 (Rank 1)
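A Log Probability Score defined this way can be sketched as follows. The use of base 2 logarithms is an assumption on my part here, as the text above says only "1 + the standard definition"; the function name is also hypothetical.

```python
import math


def log_probability_score(probs, home_wins):
    """Average of 1 + log2(probability assigned to the actual result).

    ASSUMPTION: base 2 logarithms; higher scores are better.
    probs     : home team probability forecasts, strictly between 0 and 1
    home_wins : 1 if the home team won that game, otherwise 0
    """
    total = 0.0
    for p, w in zip(probs, home_wins):
        # Probability the forecaster attached to what actually happened.
        p_outcome = p if w == 1 else 1 - p
        total += 1 + math.log2(p_outcome)
    return total / len(probs)
```

With base 2, a forecaster who rates every game a 50:50 proposition scores exactly zero, and a perfectly confident and correct forecaster scores 1 per game.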

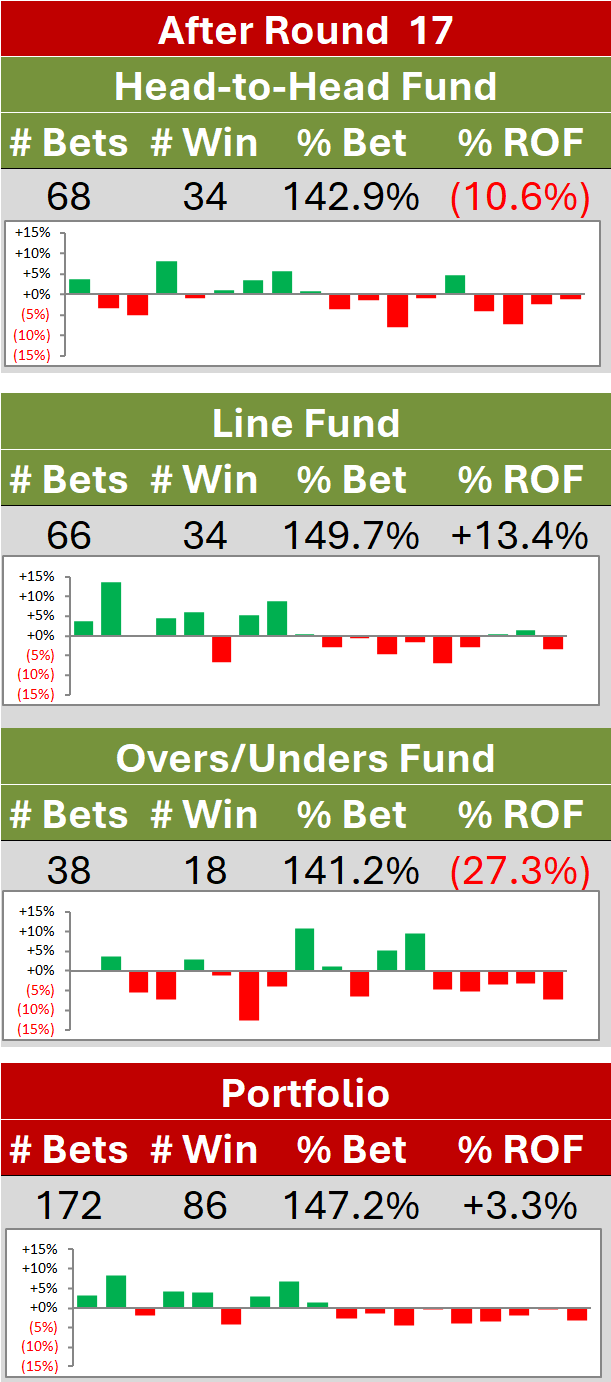

All of which gives me some pause that I'm using MoSHBODS rather than MoSSBODS to power the Head-to-Head Fund this year, but the empirical data does suggest slightly superior returns to using MoSHBODS, so I'll not be reversing that decision. At least, for now ...